As part of adopting CD (Continuous Delivery) and embracing a DevOps culture, shifting security left is one of the capabilities needed to drive high software delivery and organisational performance.

Tech companies have recognised that security is EVERYONE's responsibility, i.e. security follows a distributed ownership model. Shifting left means addressing concerns such as security earlier in the development lifecycle.

The principles of shifting left apply to security, not only to operations. It’s critical to prevent breaches before they can affect users, and to move quickly to fix newly discovered security vulnerabilities.

In software development, there are at least four activities: design, develop, test, and release. In a traditional software development cycle, testing (including security testing) happens after development is complete. This typically means that a team discovers significant problems, including architectural flaws, late in the cycle, when they are expensive to fix.

After defects are discovered, developers must find the contributing factors and work out how to fix them. In complex production systems there is rarely a single cause; more often, a series of factors interact to cause a defect. Defects involving security, performance, and availability are expensive and time-consuming to remedy, as they often require architectural changes. The time required to find the defect, develop a solution, and fully test the fix is unpredictable, which can push delivery dates out further.

Research from the 2022 Accelerate State of DevOps report by DevOps Research and Assessment (DORA) shows that teams achieve better outcomes by making security part of everyone's daily work, instead of testing for security concerns at the end of the process. One key finding is that the largest predictor of an organization's software security practices was not technical but cultural. Using Westrum's organizational typology, high-trust, low-blame cultures focused on performance were significantly more likely to adopt emerging security practices than low-trust, high-blame cultures focused on power or rules. For more on organisational culture and my assessment of the book Accelerate, check out my previous blog post.

How to improve security quality

Get Infosec involved in software design

The Infosec team should get involved in the design phase of all projects. As you can imagine, the ratio of engineers to security staff within a company is usually high, so companies need to rethink how they approach shifting security left. I found this great article on how a company adopted the Team Topologies approach and reframed security as an enabling team.

In Team Topologies terms, this is the facilitating interaction mode. It helps reduce capability gaps and suits situations where one or more teams would benefit from another team actively facilitating or coaching an aspect of their work. It is the primary operating mode of an enabling team, which supports many other teams, and it can also help uncover gaps or inconsistencies in the components and services those teams use.

Educating teams on the OWASP Top 10 and the OWASP API Security Top 10 is extremely important. Broken authorisation (AuthZ), excessive data exposure, and insufficient logging and monitoring remain high on the list.
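To make AuthZ and excessive data exposure concrete, here is a minimal Python sketch assuming a hypothetical `UserRecord` model. The handler checks object-level authorisation before returning anything, and it serialises only an explicit allow-list of fields rather than the whole object. The names `UserRecord`, `PUBLIC_USER_FIELDS`, `Forbidden` and `get_profile` are illustrative only and do not come from any framework.

```python
from dataclasses import dataclass, asdict


@dataclass
class UserRecord:
    # Hypothetical internal model; some fields must never leave the service.
    id: int
    email: str
    display_name: str
    password_hash: str
    is_admin: bool


# Explicit allow-list of fields the public API may return.
PUBLIC_USER_FIELDS = ("id", "display_name")


class Forbidden(Exception):
    """Raised when the caller is not allowed to read the requested object."""


def to_public_response(user: UserRecord) -> dict:
    # Serialise only allow-listed fields instead of dumping the whole object.
    record = asdict(user)
    return {field: record[field] for field in PUBLIC_USER_FIELDS}


def get_profile(requester: UserRecord, target: UserRecord) -> dict:
    # Object-level authorisation: users may read their own profile; admins may read any.
    if requester.id != target.id and not requester.is_admin:
        raise Forbidden(f"user {requester.id} may not read user {target.id}")
    return to_public_response(target)
```

With an allow-list, a new sensitive field added to `UserRecord` is not leaked by default; someone has to add it to the public fields deliberately.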

Education Programs

Companies like AWS have created a guardian program whereby every stream-aligned team needs a security guardian (or what I like to call a security “champion”). A team cannot deliver value to its customers (release to production) without one. No guardian, no shipping software! 😛

The program consists of workshops and training from the security team (OWASP, threat modelling, the security pillar of the AWS Well-Architected Framework, etc.). Because of the ratio of engineers to security staff, the security team is regarded as an enabling function. This ties back to Team Topologies and its four team types (enabling, stream-aligned, platform and complicated-subsystem).

Instead of “us versus them,” make security part of development from the start, and encourage day-to-day collaboration between both teams.

Security and compliance

Threat modelling

I have observed companies adopting a threat modelling framework as part of their design assessment. Frameworks such as STRIDE and PASTA are popular. OWASP, for example, has a threat modelling tool called Threat Dragon that supports frameworks such as STRIDE.

Threat modelling is a set of techniques to help you identify and classify potential threats during the development process — but I want to emphasize that this is not a one-off activity only done at the start of projects.

This is because throughout the lifetime of any software, new threats will emerge and existing ones will continue to evolve thanks to external events and ongoing changes to requirements and architecture. This means that threat modelling needs to be repeated periodically — the frequency of repetition will depend on the circumstances and will need to consider factors such as the cost of running the exercise and the potential risk to the business. When used in conjunction with other techniques, such as establishing cross-functional security requirements to address common risks in the project's technologies and using automated security scanners, threat modelling can be a powerful asset.
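As a rough illustration of how STRIDE structures a session, here is a Python sketch that enumerates candidate threats for each element of a data flow diagram. It uses a simplified version of the commonly cited STRIDE-per-element mapping, and the element names in the usage are hypothetical. The output is only a checklist to discuss, not a threat model in itself, but because it is cheap to regenerate it fits the idea of repeating the exercise whenever the architecture changes.

```python
from enum import Enum


class Stride(Enum):
    # Each STRIDE category paired with the security property it threatens.
    SPOOFING = "authentication"
    TAMPERING = "integrity"
    REPUDIATION = "non-repudiation"
    INFORMATION_DISCLOSURE = "confidentiality"
    DENIAL_OF_SERVICE = "availability"
    ELEVATION_OF_PRIVILEGE = "authorisation"


# Simplified STRIDE-per-element mapping for data flow diagram element types.
# Data stores holding audit logs are often also checked for repudiation; omitted here.
APPLICABLE = {
    "external_entity": {Stride.SPOOFING, Stride.REPUDIATION},
    "process": set(Stride),
    "data_store": {Stride.TAMPERING, Stride.INFORMATION_DISCLOSURE, Stride.DENIAL_OF_SERVICE},
    "data_flow": {Stride.TAMPERING, Stride.INFORMATION_DISCLOSURE, Stride.DENIAL_OF_SERVICE},
}


def enumerate_threats(elements: dict[str, str]) -> list[tuple[str, str, str]]:
    """Return (element, STRIDE category, threatened property) rows, sorted for review."""
    rows = []
    for name, kind in sorted(elements.items()):
        for category in sorted(APPLICABLE[kind], key=lambda c: c.name):
            rows.append((name, category.name, category.value))
    return rows
```

For example, `enumerate_threats({"browser": "external_entity", "orders-api": "process"})` yields two rows for the browser and all six categories for the API.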

AWS provides workshops on threat modelling to educate teams on the approach. GitHub describes its threat modelling process, how it improves communication between the security and engineering teams, and how it helps them "shift left".

Bug bounty programs

Many companies are now adopting and investing in bug bounty programs. A bug bounty program can give you continuous vulnerability insights across your expanding digital attack surface, helping you eliminate critical threat “blind spots” and strengthen your security posture.

Check out this interview with one of the security researchers in the GitHub Security Bug Bounty program to learn more about their background and how they keep up with the latest vulnerability trends. One program I have used in the past is Bugcrowd.

CTF (Capture The Flag) competitions are also a great way to keep engineers engaged and build a security mindset.

Adopting an NFR (Non-Functional Requirements) checklist in the design phase helps deliver quality at speed by covering performance, security and reliability up front. One example of a compliance control is the four-eyes principle, where at least two approvers are needed before a PR can be merged. A CODEOWNERS file automatically assigns individuals (or teams) to review PRs. Below I elaborate on the tooling that can enable and automate compliance early in the development lifecycle.
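On GitHub you would normally enforce the four-eyes principle with branch protection (a minimum number of required approvals, optionally requiring review from code owners) rather than custom code. The Python sketch below only spells out the rule itself, assuming a hypothetical `Review` record, reviews supplied in chronological order, and review state strings loosely modelled on GitHub's.

```python
from dataclasses import dataclass

# Review states loosely modelled on GitHub's; plain "COMMENTED" reviews are ignored.
COUNTED_STATES = {"APPROVED", "CHANGES_REQUESTED", "DISMISSED"}


@dataclass(frozen=True)
class Review:
    reviewer: str
    state: str


def satisfies_four_eyes(author: str, reviews: list[Review], required: int = 2) -> bool:
    """Return True if at least `required` people other than the author approved
    and no reviewer's latest counted review requests changes.

    `reviews` is assumed to be in chronological order.
    """
    latest: dict[str, str] = {}
    for review in reviews:
        if review.reviewer == author or review.state not in COUNTED_STATES:
            continue  # self-reviews and plain comments never count
        latest[review.reviewer] = review.state
    if "CHANGES_REQUESTED" in latest.values():
        return False
    approvers = {name for name, state in latest.items() if state == "APPROVED"}
    return len(approvers) >= required
```

Tracking only each reviewer's latest counted review means a later "changes requested" overrides that reviewer's earlier approval, while a plain comment does not.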

Security-approved tools

Security-approved tools help integrate and automate security checks in your day-to-day work as an engineer. Over time I have noticed the rapid rise of DevSecOps and the tooling that supports it. The areas I have used and observed include (non-exhaustive list):

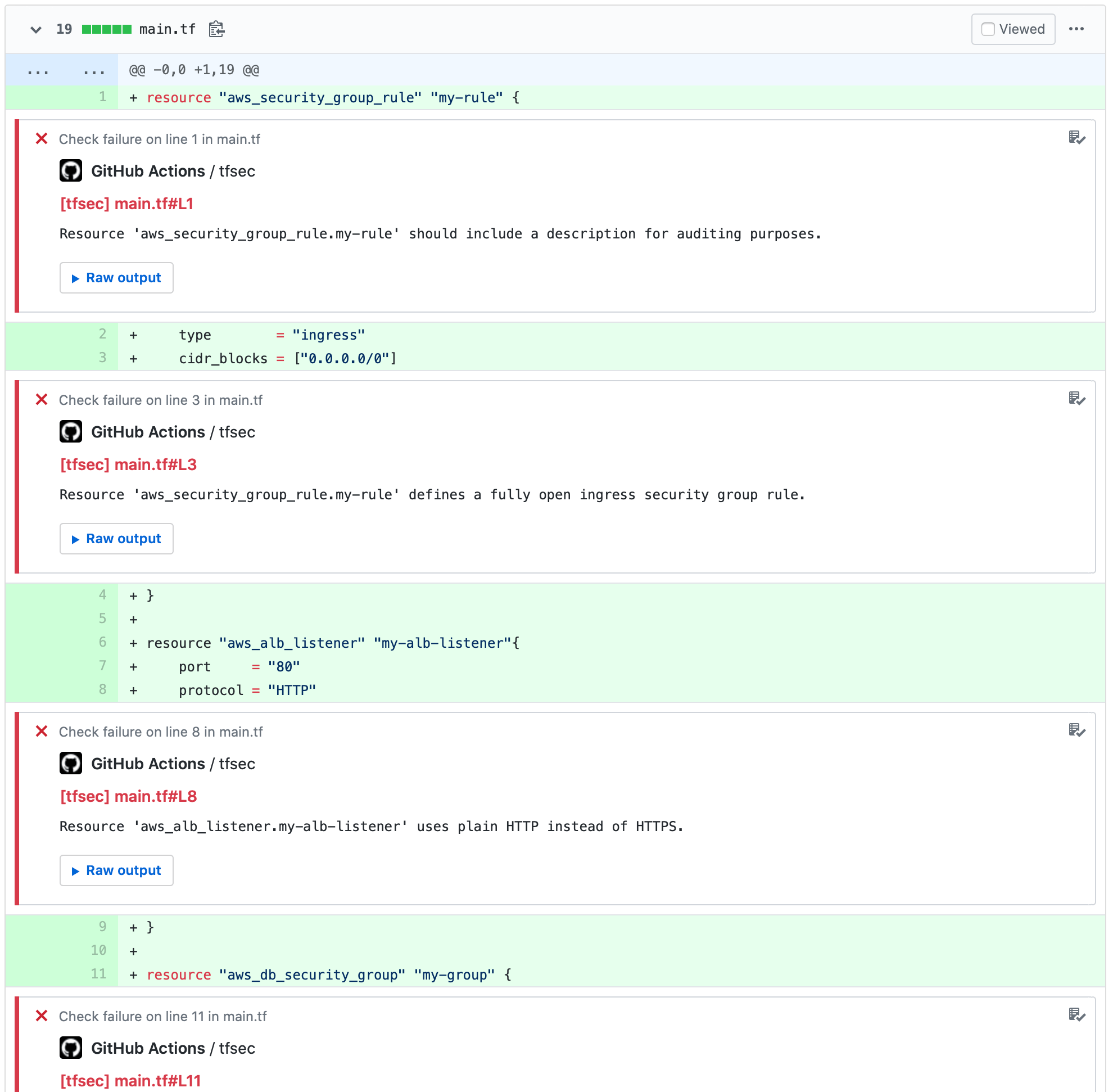

Infra as code scanning

- tfsec - uses static analysis of your Terraform code to spot potential misconfigurations. It can be easily incorporated into your CI pipeline.

- Checkov by Bridgecrew - scans infrastructure code across platforms such as Terraform, CloudFormation, Kubernetes, Helm, ARM Templates and the Serverless Framework.

- Snyk - supports many formats and can also detect drift after deployment.

- Conftest - policy enforcement for Terraform. DoorDash has a great article on how they ensure reliability and velocity with Conftest and Atlantis.
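Scanners like tfsec and Checkov ship with many built-in rules. To show the underlying idea, here is a minimal Python sketch of one such rule, run against the JSON form of a Terraform plan (as produced by `terraform show -json <planfile>`). It assumes ingress rules are declared inline in `aws_security_group` resources; standalone `aws_security_group_rule` resources are ignored. Conftest would express the same policy in Rego; Python is used here only as an illustration.

```python
import json

# CIDR ranges that mean "anywhere" for IPv4 and IPv6.
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}


def load_plan(path: str) -> dict:
    # Expects the output of `terraform show -json <planfile>` saved to a file.
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def find_open_ingress(plan: dict) -> list[str]:
    """Flag planned aws_security_group resources with ingress open to the internet."""
    findings = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "aws_security_group":
            continue
        # "after" is null when the resource is being deleted.
        after = (rc.get("change") or {}).get("after") or {}
        for rule in after.get("ingress") or []:
            cidrs = set(rule.get("cidr_blocks") or []) | set(rule.get("ipv6_cidr_blocks") or [])
            open_ranges = sorted(cidrs & OPEN_CIDRS)
            if open_ranges:
                findings.append(
                    f"{rc.get('address')}: ports {rule.get('from_port')}-{rule.get('to_port')} "
                    f"open to {', '.join(open_ranges)}"
                )
    return findings
```

In a pipeline you would run `find_open_ingress(load_plan("plan.json"))` and fail the job if the list is non-empty.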

Bots

I am a big fan of chatbots! I recently watched a presentation from a company that built multiple chatbots to help shift security left and assist with remediation.

They had an intern with an interest in Python who created some very nifty chatbots.

Behind the scenes, they use Cloud Conformity from Trend Micro. When an engineer is naughty and uses ClickOps to open a security group (or an S3 bucket) to the world, the bot alerts the channel about the issue in real time (it only broadcasts high- and medium-severity findings). You can also ask the bot who made the change: it scans CloudTrail, finds the IAM user in question, links them to their Slack handle and posts back to the channel who is to “blame” (in the sense of git blame rather than literally blaming anyone!). The bot can also perform auto-remediation. This is just one example of the opportunities that chatbots offer.
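Here is a minimal Python sketch of just the "who made the change" step. It assumes events shaped loosely like CloudTrail records for `AuthorizeSecurityGroupIngress` (the field names should be checked against real events) and a hypothetical `IAM_TO_SLACK` mapping maintained by the team. Fetching events, severity filtering and posting to Slack are left out.

```python
from typing import Optional

# Hypothetical team-maintained mapping from IAM user names to Slack handles.
IAM_TO_SLACK = {"alice": "@alice.smith"}


def opens_to_world(event: dict) -> bool:
    """True if an AuthorizeSecurityGroupIngress event adds a 0.0.0.0/0 range."""
    if event.get("eventName") != "AuthorizeSecurityGroupIngress":
        return False
    params = event.get("requestParameters") or {}
    for permission in params.get("ipPermissions", {}).get("items", []):
        for ip_range in permission.get("ipRanges", {}).get("items", []):
            if ip_range.get("cidrIp") == "0.0.0.0/0":
                return True
    return False


def build_alert(event: dict) -> Optional[str]:
    """Return a chat message naming who made the change, or None if there is nothing to report."""
    if not opens_to_world(event):
        return None
    user = (event.get("userIdentity") or {}).get("userName", "unknown user")
    who = IAM_TO_SLACK.get(user, user)  # fall back to the IAM name if unmapped
    group = (event.get("requestParameters") or {}).get("groupId", "unknown group")
    return f"HIGH: {group} was opened to 0.0.0.0/0 by {who}"
```

Keeping the detection and the message formatting as pure functions makes the bot's logic easy to unit test without touching AWS or Slack.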

Linting (Compliance)


Linters are tools used to flag programming errors, bugs, stylistic errors and suspicious constructs. Examples include:

- ESLint - an open-source project that helps you find and fix problems in your JavaScript code.

- TFLint - a pluggable Terraform linter that finds possible errors (such as invalid instance types) for the major cloud providers (AWS/Azure/GCP) and enforces best practices.

- actionlint - a static checker for GitHub Actions workflow files. I use the VS Code extension extensively.

- yamllint - a linter for YAML files.

- OAS linting - Spectral is a linter for OpenAPI Specification (OAS) documents that enforces API design best practices. I have introduced it at organisations before to align with their API guidelines and catch inconsistencies early in the development lifecycle.
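Spectral rules are declared in YAML or JSON rulesets rather than written as code, so the following Python is only an illustration of what two such rules check on a parsed OpenAPI document: that every operation has an `operationId` (Spectral's OAS ruleset includes a similar rule) and that path segments are kebab-case, which is a hypothetical house guideline used here as an example.

```python
import re

# The HTTP methods an OpenAPI 3 path item may contain.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}
# A kebab-case literal segment, or a path template parameter such as {id}.
SEGMENT = re.compile(r"^([a-z0-9]+(-[a-z0-9]+)*|\{[A-Za-z0-9_]+\})$")


def lint_openapi(spec: dict) -> list[str]:
    """Report non-kebab-case path segments and operations missing an operationId."""
    problems = []
    for path, item in spec.get("paths", {}).items():
        for segment in path.strip("/").split("/"):
            if segment and not SEGMENT.match(segment):
                problems.append(f"{path}: segment '{segment}' is not kebab-case")
        for method, operation in item.items():
            # Path items may also hold keys like "parameters" or "summary"; skip those.
            if method in HTTP_METHODS and "operationId" not in operation:
                problems.append(f"{method.upper()} {path}: missing operationId")
    return problems
```

Running checks like these in CI on every spec change is what catches inconsistencies before anyone writes client code against them.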

If you are using GitHub Actions, check out this awesome list of linters recommended by the community.

Security & Version updates

OWASP published its latest Top 10 last year (2021), and Vulnerable and Outdated Components moved up to sixth position (A06:2021).

Dependabot and Renovate are two tools I have used for dependency updates and vulnerability management. Dependabot is now part of the GitHub ecosystem and is maturing quickly, but I do miss Renovate's handy presets and its ability to group related dependencies.

One lesson I have learnt from automating this process is to watch out for dependency fatigue. Agree an update schedule with the team and decide which dependencies have the highest priority. Otherwise, you can end up overwhelmed with PRs and start ignoring updates!
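Renovate handles this declaratively (for example with `packageRules`, `groupName` and `schedule` in its configuration). To show the batching idea itself, here is a small Python sketch with a hypothetical `Update` record and a simplified `MAJOR.MINOR.PATCH` version parser: security fixes and major bumps get their own PR, while routine minor and patch bumps are batched by name prefix, which reduces the number of PRs the team has to review.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Update:
    name: str
    current: str  # assumed plain "MAJOR.MINOR.PATCH", no pre-release tags
    latest: str
    security: bool = False


def bump_type(update: Update) -> str:
    cur = [int(part) for part in update.current.split(".")]
    new = [int(part) for part in update.latest.split(".")]
    if new[0] != cur[0]:
        return "major"
    if new[1] != cur[1]:
        return "minor"
    return "patch"


def plan_pull_requests(updates: list[Update], group_prefixes: list[str]) -> list[list[str]]:
    """Security fixes and major bumps get their own PR; the rest are batched by prefix."""
    individual: list[list[str]] = []
    batches: dict[str, list[str]] = defaultdict(list)
    for update in sorted(updates, key=lambda u: u.name):
        if update.security or bump_type(update) == "major":
            individual.append([update.name])
            continue
        group = next((p for p in group_prefixes if update.name.startswith(p)), "other")
        batches[group].append(update.name)
    return individual + [batches[group] for group in sorted(batches)]
```

Keeping security fixes out of the batches means an urgent patch is never blocked behind a noisy group of routine bumps.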

GitHub describes how it uses Dependabot internally to secure its own platform.

OWASP Dependency-Check is also a useful tool to run in your CI pipeline to identify known vulnerabilities in your dependencies.
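Dependency-Check can fail a build itself when a finding's CVSS score reaches a threshold (its `--failOnCVSS` option). The Python sketch below shows the same gating idea on a hypothetical, simplified list of findings and returns a process exit code for a CI step; the finding fields and the 7.0 default threshold are assumptions for illustration.

```python
import sys


def cvss_gate(findings: list[dict], fail_on_cvss: float = 7.0) -> int:
    """Return 1 if any finding's CVSS score meets the threshold, else 0."""
    blocking = [f for f in findings if f["cvss"] >= fail_on_cvss]
    # Report the worst findings first so they are easy to spot in CI logs.
    for f in sorted(blocking, key=lambda f: f["cvss"], reverse=True):
        print(f"BLOCKING {f['id']} (CVSS {f['cvss']}) in {f['dependency']}", file=sys.stderr)
    return 1 if blocking else 0
```

A CI step would end with `sys.exit(cvss_gate(findings))`. Choosing the threshold is a team decision: too low and you are back to dependency fatigue, too high and real risks slip through.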

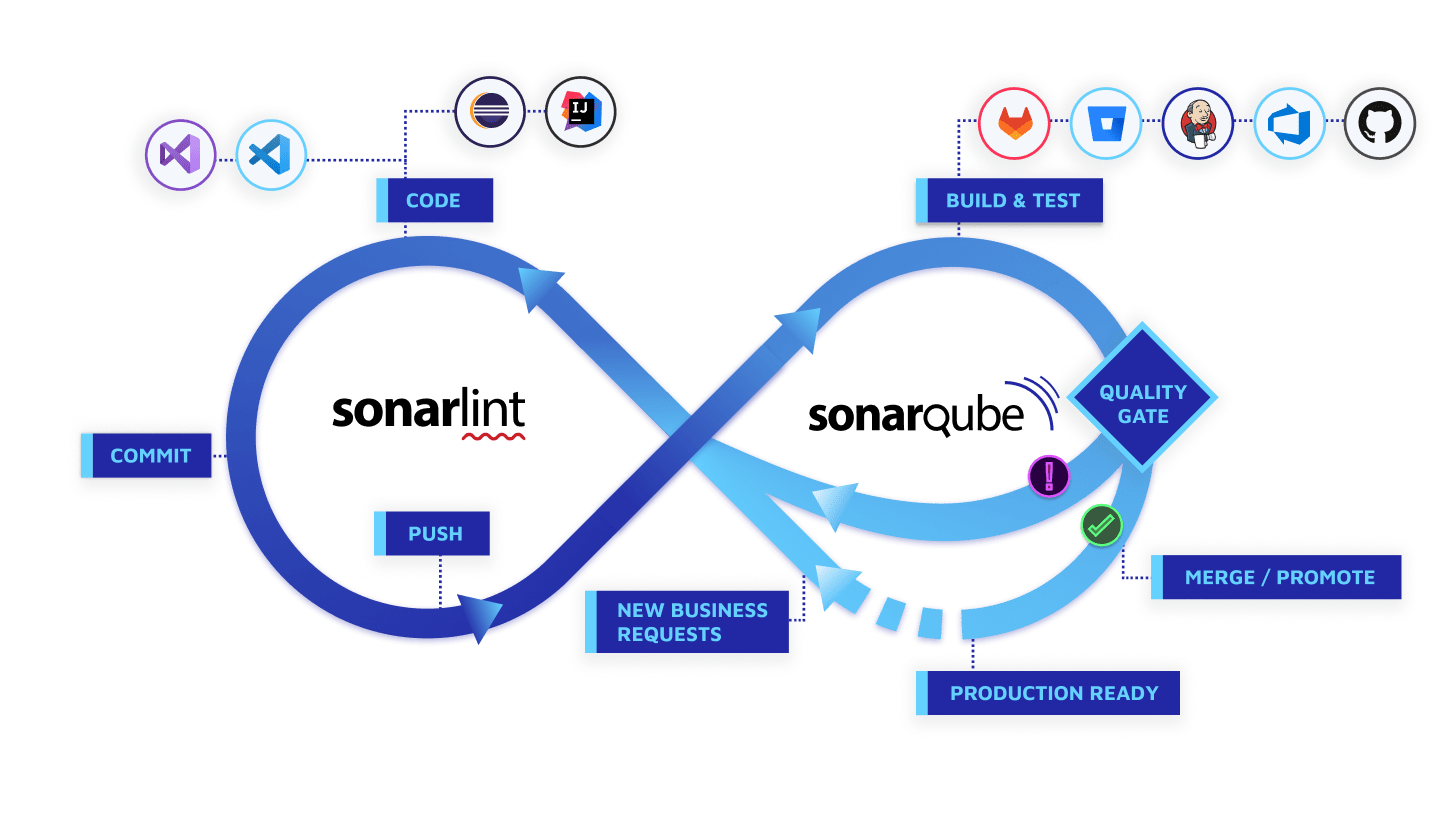

Code scanning (SAST)

SonarQube is a self-managed, automatic code review tool that helps you deliver clean code. It integrates into your existing workflow and detects issues in your code, enabling continuous inspection of your projects. It analyses 30+ programming languages and integrates with your CI pipeline and DevOps platform to help ensure your code meets high quality standards.
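SonarQube's analysers are far more sophisticated, but the core idea of SAST (inspecting the structure of code without running it) can be sketched with Python's standard `ast` module. This minimal example flags direct calls to `eval` and `exec`; unlike a plain text search, it ignores the word "eval" appearing in strings or comments.

```python
import ast

DANGEROUS_CALLS = {"eval", "exec"}


def find_dangerous_calls(source: str, filename: str = "<string>") -> list[str]:
    """Return 'file:line: call to name()' for every direct eval/exec call, in line order."""
    hits = []
    for node in ast.walk(ast.parse(source, filename=filename)):
        # Only plain-name calls like eval(...) are matched, not obj.eval(...).
        if (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
                and node.func.id in DANGEROUS_CALLS):
            hits.append((node.lineno, node.func.id))
    return [f"{filename}:{line}: call to {name}()" for line, name in sorted(hits)]
```

An aliased call such as `f = eval; f(x)` would slip through this check, which is one reason real SAST tools add data-flow analysis on top of pattern matching.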

Container scanning

Twistlock is now part of Palo Alto’s Prisma Cloud offering and is one of the leading container security scanning solutions.

Snyk also offers a similar product (Snyk Container) for scanning images.
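Image scanners such as Prisma Cloud and Snyk mainly match the packages inside an image against vulnerability databases, which is hard to sketch briefly. A complementary check often run alongside them is a Dockerfile policy. The Python sketch below is a simplified illustration, not how either product works: it flags base images with no tag or the `latest` tag, and final stages that never switch to a non-root `USER`. It ignores line continuations and `ARG`-substituted image names, and it treats any tag as pinned even though tags are mutable (pinning by `@sha256:` digest is stronger).

```python
def lint_dockerfile(text: str) -> list[str]:
    """Flag unpinned or ':latest' base images and final stages that run as root."""
    findings: list[str] = []
    stages: set[str] = set()  # names declared with "FROM ... AS name"
    final_user = None         # last USER seen in the current stage
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        instruction = parts[0].upper()
        rest = parts[1] if len(parts) > 1 else ""
        args = [arg for arg in rest.split() if not arg.startswith("--")]  # drop flags like --platform
        if instruction == "FROM" and args:
            image = args[0]
            # USER is not carried over from earlier stages; the base image's default (often root) applies.
            final_user = None
            skip = image == "scratch" or "@" in image or image.lower() in stages
            tag = image.rsplit("/", 1)[-1].partition(":")[2]  # ignore registry ports like host:5000
            if not skip and tag in ("", "latest"):
                findings.append(f"line {lineno}: base image '{image}' is not pinned to a version")
            if len(args) >= 3 and args[1].upper() == "AS":
                stages.add(args[2].lower())
        elif instruction == "USER" and args:
            final_user = args[0].split(":")[0]  # "1000:1000" -> "1000"
    if final_user in (None, "root", "0"):
        findings.append("final stage runs as root (no non-root USER set)")
    return findings
```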

I hope you have found this list of security tools useful. Head over to the CNCF landscape to find more.

Which leaves the question: are we still shifting left? Is it realistic to expect developers to take on the burdens of security and infrastructure provisioning as well as writing their applications? Is platform engineering the answer to saving the DevOps dream? I will leave you with that thought! 😉