TL;DR

Recently I had to look at horizontally scaling a traditional web app on Kubernetes. Here I will explain how I achieved it, what an ingress controller is, and why you would use one.

I assume you know what pods are, so I will quickly break down the Service and Ingress resources.

Service

A Service is a logical abstraction that gives you a stable communication layer in front of a set of pods. During normal operations pods get created, destroyed, scaled out, etc.

A Service makes it easy to always reach the pods: you connect to the Service, which stays stable throughout the pod life cycle. An important property of a Service is its type, which determines how the Service is exposed to the cluster or the internet. Some of the service types are:

- ClusterIP: the default type. The service is only exposed internally, on a cluster-internal IP. An example would be deploying HashiCorp's Vault and exposing it only inside the cluster.

- NodePort: exposes the service on a static port on every node, which on AWS means every EC2 instance in the cluster. Whether that port is reachable from the internet depends on your AWS security group / VPC rules.

- LoadBalancer: supported on Amazon and Google Cloud, among others. This provisions the load balancer of the cloud provider you are using, so on Amazon it creates an ELB that points to your service in the cluster.

- ExternalName: maps the service to an external domain name by returning a CNAME DNS record.
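As a rough illustration, here are hypothetical manifests for three of these types. The names (vault, my-app, external-db), labels and ports are placeholders chosen for the example, not taken from a real deployment. A LoadBalancer service looks the same as the NodePort one, with type: LoadBalancer instead.

```yaml
# ClusterIP (the default): reachable only from inside the cluster.
apiVersion: v1
kind: Service
metadata:
  name: vault              # hypothetical name
spec:
  type: ClusterIP
  selector:
    app: vault             # traffic goes to pods carrying this label
  ports:
    - port: 8200           # port the Service listens on
      targetPort: 8200     # port the container listens on
---
# NodePort: additionally opens the same port on every node (EC2 instance).
apiVersion: v1
kind: Service
metadata:
  name: my-app             # hypothetical name
spec:
  type: NodePort
  selector:
    app: my-app
  ports:
    - port: 80
      targetPort: 8080
      nodePort: 30080      # must lie in the node-port range (30000-32767 by default)
---
# ExternalName: no selector and no proxying; cluster DNS returns a CNAME.
apiVersion: v1
kind: Service
metadata:
  name: external-db        # hypothetical name
spec:
  type: ExternalName
  externalName: db.example.com
```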

For more information about Services look at https://kubernetes.io/docs/concepts/services-networking/service/

Ingress

An Ingress is a collection of rules that allow inbound connections to reach the cluster services.

You define a number of rules, typically matching on host name and URL path, that decide which service a request is routed to.
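A minimal sketch of such rules, assuming two hypothetical services, api-service and web-service, behind the made-up host app.example.com. It uses the extensions/v1beta1 API that the rest of this post uses; that API was removed in Kubernetes 1.22, where Ingress lives in networking.k8s.io/v1 with a slightly different backend syntax.

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: example-ingress              # hypothetical name
spec:
  rules:
    - host: app.example.com          # match on the Host header...
      http:
        paths:
          - path: /api               # ...and on the URL path
            backend:
              serviceName: api-service
              servicePort: 80
          - path: /                  # everything else on this host
            backend:
              serviceName: web-service
              servicePort: 80
```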

Scenario

Imagine this scenario: you have a cluster running on Amazon with multiple applications deployed to it. Some are JVM microservices (Spring Boot) running inside embedded Tomcat and, to add to the mix, you have a couple of SPAs sitting in an Apache web server that serves static content.

All applications need TLS, and some of the API endpoints have changed, but you still have to serve the old endpoint paths, so you need some sort of path rewrite. How do you expose everything to the internet? The obvious answer is to create a LoadBalancer-type service for each application. But then multiple ELBs are created, you have to deal with TLS termination at each ELB, you have to CNAME each application/API domain name to the right ELB, and in general you have very little control over the ELBs.

Enter Ingress Controllers. 👍

What is an ingress controller?

An Ingress Controller is a daemon, deployed as a Kubernetes Pod, that watches the apiserver's /ingresses endpoint for updates to the Ingress resource. Its job is to satisfy requests for Ingresses.

You deploy an ingress controller and create a LoadBalancer-type service for it. The controller then monitors the Kubernetes API server's /ingresses endpoint and acts as a reverse proxy for the ingress rules it finds there.

You then deploy your application, expose its service as type NodePort, and create ingress rules for it. (With the nginx ingress controller a ClusterIP service works too, because the controller runs inside the cluster.) The ingress controller picks up the newly deployed service and proxies traffic to it from outside.

Following this setup, you have only one ELB on Amazon, and a central place, the ingress controller, to manage the traffic coming into your cluster for all your applications.
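To make the single-entry-point idea concrete, here is a hedged sketch of one Ingress that terminates TLS for two hosts and fans out to a Spring Boot API and an Apache-hosted SPA. The hosts, service names, ports and the example-tls Secret are all hypothetical; the Secret would need to exist in the same namespace and hold the certificate as tls.crt and tls.key. Path rewrites are left out because the rewrite annotation's behaviour differs between controller versions.

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: apps                                # hypothetical name
  annotations:
    kubernetes.io/ingress.class: "nginx"    # handled by the nginx ingress controller
spec:
  tls:
    - hosts:
        - api.example.com
        - www.example.com
      secretName: example-tls               # hypothetical Secret with tls.crt / tls.key
  rules:
    - host: api.example.com
      http:
        paths:
          - path: /
            backend:
              serviceName: orders-api       # hypothetical Spring Boot service
              servicePort: 8080
    - host: www.example.com
      http:
        paths:
          - path: /
            backend:
              serviceName: spa-frontend     # hypothetical Apache service serving the SPA
              servicePort: 80
```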

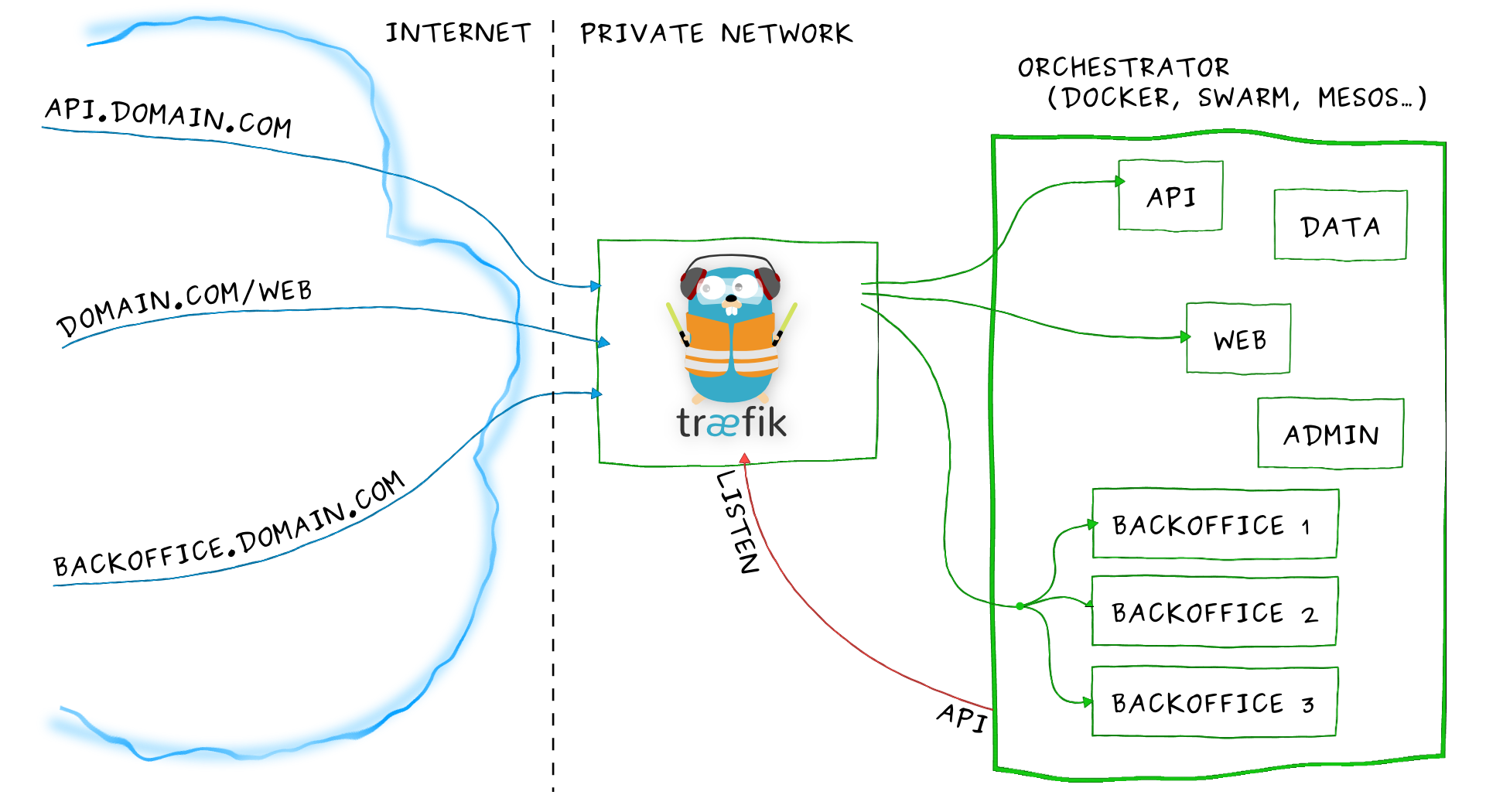

To visualise how this works, check out this little guy! Traefik is one implementation you can use as an ingress controller.

I chose the nginx ingress controller instead, as it supports sticky sessions and nginx is an extremely popular reverse proxy.

So let's get to the interesting part: coding!

Demo

I am going to set up a gossip-based Kubernetes cluster on AWS using kops (a cluster whose name ends in .k8s.local, so no Route 53 hosted zone is needed). Then I will create an nginx ingress controller and reverse proxy to a sample app called echoheaders.

To set up a Kubernetes cluster on AWS, follow the guide at https://github.com/shavo007/k8s-ingress

If you do not want to install kops and the other tools needed, I have built a simple Docker image that you can use instead.

https://store.docker.com/community/images/shanelee007/alpine-kops

This includes:

- Kops

- Kubectl

- AWS CLI

- Terraform

Once you have the cluster, the first thing to do is set up a default backend service for nginx.

The default backend is the service nginx falls back to if it cannot route a request successfully. The default backend needs to satisfy the following two requirements:

- serves a 404 page at /

- serves a 200 on /healthz
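For reference, a default backend that meets both requirements could look roughly like the following. It is modelled on the default backend that ingress-nginx deployed at the time; the image tag, names and labels are assumptions, so compare against the manifests in the deploy folder before using it.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: default-http-backend          # assumed name
  namespace: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: default-http-backend
  template:
    metadata:
      labels:
        app: default-http-backend
    spec:
      containers:
        - name: default-http-backend
          image: gcr.io/google_containers/defaultbackend:1.4   # assumed image/tag
          ports:
            - containerPort: 8080     # serves 404 on / and 200 on /healthz
          livenessProbe:
            httpGet:
              path: /healthz          # requirement 2: 200 on /healthz
              port: 8080
            initialDelaySeconds: 30
            timeoutSeconds: 5
---
apiVersion: v1
kind: Service
metadata:
  name: default-http-backend
  namespace: ingress-nginx
spec:
  selector:
    app: default-http-backend
  ports:
    - port: 80
      targetPort: 8080
```

The controller is then pointed at this service with its --default-backend-service=ingress-nginx/default-http-backend argument (namespace/name).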

See more at https://github.com/kubernetes/ingress-nginx/tree/master/deploy

Run the mandatory commands listed there, and install the controller without RBAC roles.

Then install the layer 7 load balancer service for AWS, either from https://github.com/kubernetes/ingress-nginx/tree/master/deploy#aws or from the service defined in my repo:

```shell
kubectl apply -f ingress-nginx-svc.yaml
```
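The actual ingress-nginx-svc.yaml lives in the repo; as a hedged sketch, a layer 7 Service for the controller on AWS would look something like this. The certificate ARN is a placeholder, and the app: ingress-nginx selector and the named container port http are assumptions about how the controller pods are labelled. The annotations are ones read by the Kubernetes AWS cloud provider.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx                 # matches the name queried with kubectl below
  namespace: ingress-nginx
  annotations:
    # the ELB talks HTTP to the backends (layer 7 instead of raw TCP)
    service.beta.kubernetes.io/aws-load-balancer-backend-protocol: "http"
    # terminate TLS at the ELB; placeholder ARN
    service.beta.kubernetes.io/aws-load-balancer-ssl-cert: "arn:aws:acm:REGION:ACCOUNT:certificate/CERT-ID"
    # only the port named "https" uses the certificate
    service.beta.kubernetes.io/aws-load-balancer-ssl-ports: "https"
spec:
  type: LoadBalancer
  selector:
    app: ingress-nginx                # assumed controller pod label
  ports:
    - name: http
      port: 80
      targetPort: http                # assumed named port on the controller container
    - name: https
      port: 443
      targetPort: http                # TLS already terminated at the ELB, forward plain HTTP
```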

Running these commands creates a deployment with one replica of the nginx-ingress-controller and a LoadBalancer-type service for it, which in turn creates an ELB for us on AWS. Let's confirm that by getting the service:

```shell
kubectl get services -n ingress-nginx -o wide | grep nginx
```

We can now test the default backend:

```shell
ELB=$(kubectl get svc ingress-nginx -n ingress-nginx -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
curl $ELB
```

You should see the following:

```
default backend - 404
```

All good so far.

This means everything is working correctly and the ELB forwarded traffic to our nginx-ingress-controller and the nginx-ingress-controller passed it along to the default-backend-service that we deployed.

Deploy our application

Now run:

```shell
kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/images/echoheaders/echo-app.yaml
kubectl apply -f ingress.yaml
```

The first command creates a deployment and a service for the echoheaders app, and the second creates the ingress resource. The app simply returns information about the HTTP request as its output.
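The contents of echo-app.yaml may have changed since this was written, so treat the following as an approximation only: a Deployment labelled app: echoheaders (the label kubetail selects on later) and a Service named echoheaders on port 80, exposed as NodePort. The echoserver image and its port 8080 are assumptions.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echoheaders                 # the name used by kubectl scale later
spec:
  replicas: 1
  selector:
    matchLabels:
      app: echoheaders
  template:
    metadata:
      labels:
        app: echoheaders            # the label kubetail -l app=echoheaders selects on
    spec:
      containers:
        - name: echoheaders
          image: gcr.io/google_containers/echoserver:1.4   # assumed image
          ports:
            - containerPort: 8080   # assumed listening port
---
apiVersion: v1
kind: Service
metadata:
  name: echoheaders
spec:
  type: NodePort
  selector:
    app: echoheaders
  ports:
    - port: 80                      # the servicePort the ingress rules refer to
      targetPort: 8080
```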

If you look at the ingress resource, you will see annotations defined.

ingress.kubernetes.io/ssl-redirect: "true" will redirect http to https.

To view all annotations check out https://github.com/kubernetes/ingress-nginx/blob/master/docs/annotations.md
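A sketch of what ingress.yaml might contain, assuming the foo.bar.com rule and the echoheaders service on port 80 described in this section; the resource name echomap is hypothetical. The annotation prefix matches the one this post uses.

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: echomap                                # hypothetical name
  annotations:
    kubernetes.io/ingress.class: "nginx"
    ingress.kubernetes.io/ssl-redirect: "true" # redirect HTTP to HTTPS when TLS is enabled for the host
spec:
  rules:
    - host: foo.bar.com
      http:
        paths:
          - path: /backend
            backend:
              serviceName: echoheaders
              servicePort: 80
```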

One ingress rule routes all requests for the virtual host foo.bar.com on path /backend to the echoheaders service. So let's test it out!

```shell
curl $ELB/backend -H 'Host: foo.bar.com'
```

You should get a 200 response back with the request headers and other info.

Sticky sessions

Now to one of the main features that nginx provides: the nginx-ingress-controller can handle sticky sessions because it bypasses the service level and routes directly to the pods. More info can be found here:

https://github.com/kubernetes/ingress-nginx/tree/master/docs/examples/affinity/cookie

Update (17/10/2017): the examples have been removed from the repo! To find out more about the annotations related to sticky sessions, go to https://github.com/kubernetes/ingress-nginx/blob/master/docs/annotations.md#miscellaneous

To test it out, we first need to scale our app: let's scale the echoheaders deployment to three pods.

```shell
kubectl scale --replicas=3 deployment/echoheaders
```

Now let's create the sticky ingress, sticky-ingress.yaml:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nginx-test-sticky
  annotations:
    kubernetes.io/ingress.class: "nginx"
    # enable cookie-based session affinity
    ingress.kubernetes.io/affinity: "cookie"
    # name of the cookie nginx sets on the client
    ingress.kubernetes.io/session-cookie-name: "route"
    # algorithm used to hash the chosen upstream into the cookie value
    ingress.kubernetes.io/session-cookie-hash: "sha1"
spec:
  rules:
  - host: stickyingress.example.com
    http:
      paths:
      - backend:
          serviceName: echoheaders
          servicePort: 80
        path: /foo
```

These annotations instruct nginx to use the nginx-sticky-module-ng module (https://bitbucket.org/nginx-goodies/nginx-sticky-module-ng), which is bundled with the controller, to handle all sticky sessions for us.

Apply it:

```shell
kubectl apply -f sticky-ingress.yaml
```

There is a very useful tool called kubetail that you can use to tail the logs of multiple pods at once and verify the sticky session behaviour. To install kubetail, check out https://github.com/johanhaleby/kubetail

Now in one terminal window, tail the logs of all echoheaders pods:

```shell
kubetail -l app=echoheaders
```

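In another, send multiple requests to the virtual host stickyingress.example.com. The first request saves the response headers, including the route cookie, to cookies.txt, and the loop then sends that cookie back once a second:

```shell
curl -D cookies.txt $ELB/foo -H 'Host: stickyingress.example.com'
while true; do sleep 1; curl -b cookies.txt $ELB/foo -H 'Host: stickyingress.example.com'; done
```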

As you can see in the kubetail output, every subsequent request is sent to the same pod. If that backend pod is removed, requests are re-routed to another upstream server and nginx creates a new cookie, as the previous hash has become invalid.
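If you are following along on a current cluster, the sticky ingress above will not apply as-is: extensions/v1beta1 was removed in Kubernetes 1.22, and newer ingress-nginx releases read annotations with the nginx.ingress.kubernetes.io/ prefix. A hedged translation to networking.k8s.io/v1 could look like this, assuming an IngressClass named nginx exists. session-cookie-hash is left out, so check the annotations documentation for your controller version.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-test-sticky
  annotations:
    nginx.ingress.kubernetes.io/affinity: "cookie"           # cookie-based affinity
    nginx.ingress.kubernetes.io/session-cookie-name: "route" # same cookie name as above
spec:
  ingressClassName: nginx            # assumed IngressClass name
  rules:
    - host: stickyingress.example.com
      http:
        paths:
          - path: /foo
            pathType: Prefix
            backend:
              service:
                name: echoheaders
                port:
                  number: 80
```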

Proxy protocol

Often you need to pass the client's IP address through to your application, for example to record it in your application logs.

To pass the client address along, add the following annotation to the ingress controller's service:

```yaml
service.beta.kubernetes.io/aws-load-balancer-proxy-protocol: '*'
```
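A sketch of where the annotation goes, assuming the ELB forwards plain TCP (proxy protocol does not apply to an HTTP listener, where the ELB adds an X-Forwarded-For header instead). nginx must also be told to expect the PROXY header through the controller's ConfigMap, otherwise it cannot parse the incoming requests. The ConfigMap name nginx-configuration, the app: ingress-nginx selector and the named container ports are assumptions about your install.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  annotations:
    # ask the ELB to prepend a PROXY protocol header to every backend connection
    service.beta.kubernetes.io/aws-load-balancer-proxy-protocol: "*"
spec:
  type: LoadBalancer
  selector:
    app: ingress-nginx          # assumed controller pod label
  ports:
    - name: http
      port: 80
      targetPort: http          # assumed named container ports
    - name: https
      port: 443
      targetPort: https         # nginx terminates TLS itself in this TCP setup
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-configuration     # assumed name of the controller's ConfigMap
  namespace: ingress-nginx
data:
  use-proxy-protocol: "true"    # make nginx read the client address from the PROXY header
```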

Update 1 (7/10/2017): it looks like this is not needed anymore.

For more see https://github.com/kubernetes/ingress-nginx#source-ip-address

To conclude, I have showcased a subset of the ingress features above. Others include path rewrites, TLS termination, path routing, scaling, RBAC, auth and Prometheus metrics. For more info, check out the resources below.

Resources

For more information visit:

GitHub project: https://github.com/shavo007/k8s-ingress

Kubernetes nginx ingress: https://github.com/kubernetes/ingress-nginx

External DNS: https://github.com/kubernetes-incubator/external-dns/blob/master/docs/tutorials/nginx-ingress.md

Kubernetes FAQ: https://github.com/hubt/kubernetes-faq/blob/master/README.md#kubernetes-faq

Alpine-kops: https://store.docker.com/community/images/shanelee007/alpine-kops