TL;DR

Voice interfaces are taking off, but how advanced are they becoming? Are we at the point where they can act as an automated agent, letting us put down our keyboards and hold a dialog as if with a friend or trusted colleague? I set out to test this by building a custom Alexa skill (recently certified on the Amazon marketplace).

You can find the skill on the Amazon marketplace here

Alexa Machine Learning

Amazon lets software developers write custom skills that are published to the Alexa platform. A custom skill acts as an application that can be invoked from the voice interface, much like the basic features that come with Alexa.

Here is an example of a phrase that invokes the Australia Calendar application.

Alexa, ask Australia Calendar what's the next holiday in state Victoria?

The Alexa Skills Kit provides the framework for tapping into the machine learning algorithms that Amazon has developed.

A key concept in the platform is teaching Alexa what the expected outcome of a particular phrase “might” be. The following pattern is a simple example.

State {AUSPOST_STATE-SLOT}

In Alexa terminology, the overall phrase is called an “Utterance”, and what’s in the curly brackets is referred to as a “Slot”. So when someone says “State Victoria” or “State Queensland”, both have the same intent; the state is simply a variable defined in the slot. When modeling the application, the developer lists the possible values for the slot (e.g. Victoria, Tasmania, Queensland).
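To make the utterance and slot idea concrete, here is a toy Python sketch. It is not how Alexa matches speech internally (that is Amazon's machine learning at work); it only illustrates that one template plus a list of slot values covers many spoken phrases. The function names and the three sample state values are my own assumptions for illustration.

```python
import re

# Hypothetical slot values; the real list lives in the skill's interaction model.
STATE_VALUES = ["Victoria", "Tasmania", "Queensland"]


def compile_utterance(template, slot_values):
    """Turn a template like 'State {Slot}' into a regex with one group per slot."""
    # re.split with a capturing group alternates: literal, slot name, literal, ...
    parts = re.split(r"\{([^}]+)\}", template)
    slot_names = parts[1::2]
    pattern = ""
    for index, part in enumerate(parts):
        if index % 2 == 0:
            pattern += re.escape(part)  # literal text of the utterance
        else:
            choices = "|".join(re.escape(value) for value in slot_values[part])
            pattern += "(" + choices + ")"  # any allowed value for this slot
    return re.compile("^" + pattern + "$", re.IGNORECASE), slot_names


def match_utterance(template, slot_values, spoken):
    """Return {slot name: spoken value} if the phrase fits the template, else None."""
    regex, slot_names = compile_utterance(template, slot_values)
    found = regex.match(spoken.strip())
    if found is None:
        return None
    return dict(zip(slot_names, found.groups()))
```

Calling `match_utterance("State {AUSPOST_STATE-SLOT}", {"AUSPOST_STATE-SLOT": STATE_VALUES}, "State Queensland")` fills the slot with "Queensland", while a phrase with a value outside the list yields `None`.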

The developer outlines this structure as part of writing a custom skill. Once approved by the Alexa team, the model (including custom slots) is ingested into the platform, where it influences the algorithms. This type of teaching is common in machine learning, and is useful for establishing patterns.

Once Alexa deciphers the spoken words, it maps them to one of these patterns, then invokes the API provided by the developer, passing along which pattern was uttered and any variables from slots.
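As a rough sketch of what arrives at the developer's API, the snippet below pulls the intent name and slot values out of an Alexa request envelope. The sample event is trimmed down and illustrative (a real request carries more fields, such as session and context), and the helper name `extract_intent` is my own.

```python
# Trimmed, illustrative request; real envelopes contain additional fields.
SAMPLE_EVENT = {
    "version": "1.0",
    "request": {
        "type": "IntentRequest",
        "intent": {
            "name": "GetNextHolidayIntent",
            "slots": {"State": {"name": "State", "value": "Victoria"}},
        },
    },
}


def extract_intent(event):
    """Return (intent_name, {slot_name: value}) or (None, {}) for non-intent requests."""
    request = event.get("request", {})
    if request.get("type") != "IntentRequest":
        return None, {}
    intent = request.get("intent", {})
    # A slot the user did not fill has no "value" key, so .get() yields None.
    slots = {
        name: slot.get("value")
        for name, slot in (intent.get("slots") or {}).items()
    }
    return intent.get("name"), slots
```

For the sample event this returns the intent name `GetNextHolidayIntent` with the `State` slot set to `Victoria`.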

More on custom slots can be seen here

When authoring an Alexa skill (the custom “app”), the challenge is anticipating all the different ways a question or statement could be phrased, then building custom slots for the variables within each utterance. That’s where engineering by the developer (me!) comes in, to take advantage of the underlying machine learning in the Alexa platform. If the pattern matching isn’t effective, that’s a problem with the machine learning and the platform itself.

Voice User Interface Design (Thoughtful code writing matters)

Once the processing is done on Alexa, an API call is made to a microservice that I developed using AWS Lambda (a serverless compute service). The quality of the user interaction depends heavily on how “flexibly” the skill is written, and on the effort put into understanding the difference between a visual/keyboard interface and a voice-driven one.

Alexa flow

Creating a good skill requires picking the potential flows that a conversation between a user and Alexa may follow. The current version of the skill follows a single flow.

Architecting the solution

The skill follows a pattern built on calendar files, where the data is relatively static and it’s more important to organize it in a way that allows navigation through voice commands. An ongoing process can refresh it over time. Going deeper, let's explore the following utterance.

“Alexa, ask Australia Calendar to find next public holiday by state Victoria”

The dialog for this question determines which calendar file to get. In this example, the data is static (there aren't new holidays being created every day), and the interaction centers on navigating a list of calendar events.
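Navigating a list of calendar events can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the skill's actual code: the sample calendar text is made up for the example, and the parser only handles simple all-day events (no folded lines, time zones, or recurrence rules).

```python
from datetime import date, datetime

# Made-up sample input for illustration only.
SAMPLE_ICS = """BEGIN:VCALENDAR
BEGIN:VEVENT
DTSTART;VALUE=DATE:20240126
SUMMARY:Australia Day
END:VEVENT
BEGIN:VEVENT
DTSTART;VALUE=DATE:20240425
SUMMARY:Anzac Day
END:VEVENT
END:VCALENDAR
"""


def parse_events(ics_text):
    """Collect (date, summary) pairs from VEVENT blocks, sorted by date."""
    events, current = [], None
    for raw_line in ics_text.splitlines():
        line = raw_line.strip()
        if line == "BEGIN:VEVENT":
            current = {}
        elif line == "END:VEVENT" and current is not None:
            if "date" in current and "summary" in current:
                events.append((current["date"], current["summary"]))
            current = None
        elif current is not None and ":" in line:
            name, value = line.split(":", 1)
            prop = name.split(";", 1)[0].upper()  # drop parameters like ;VALUE=DATE
            if prop == "DTSTART":
                current["date"] = datetime.strptime(value[:8], "%Y%m%d").date()
            elif prop == "SUMMARY":
                current["summary"] = value
    return sorted(events)


def next_holiday(events, today):
    """Return the first (date, summary) on or after today, or None if there is none."""
    for event_date, summary in events:
        if event_date >= today:
            return event_date, summary
    return None
```

With the sample data, `next_holiday(parse_events(SAMPLE_ICS), date(2024, 2, 1))` picks the April event, since the January one has already passed.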

In this use case, we can invoke the API ahead of any individual user request, then organize and cache the data in an S3 bucket. This improves performance and simplifies the runtime model. Here’s a view of how this looks using the website's calendar files and how the data is staged.

An S3 bucket stores the data, which is persisted as an iCal file object. Given the durability of S3, the data stays available to the skill at runtime, and we don't have to hammer the website again and again to find out which calendar events exist in each state.
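The staging and lookup steps might look something like the sketch below. The key layout (`calendars/<state>.ics`), the function names, and the module-level cache are assumptions of mine for illustration. The S3 client is passed in rather than created here; in a Lambda it would typically be `boto3.client("s3")`, whose `put_object` and `get_object` calls are the ones used.

```python
# In-memory cache; it survives between invocations while the Lambda container is warm.
_memory_cache = {}


def calendar_key(state):
    """Hypothetical key layout: one iCal object per state."""
    return "calendars/" + state.lower().replace(" ", "-") + ".ics"


def stage_calendar(s3_client, bucket, state, ics_text):
    """Store a state's calendar in S3 ahead of any user request."""
    s3_client.put_object(
        Bucket=bucket,
        Key=calendar_key(state),
        Body=ics_text.encode("utf-8"),
    )


def load_calendar(s3_client, bucket, state):
    """Return a state's calendar text, reading from S3 only on a cache miss."""
    cache_key = (bucket, calendar_key(state))
    if cache_key not in _memory_cache:
        response = s3_client.get_object(Bucket=bucket, Key=calendar_key(state))
        _memory_cache[cache_key] = response["Body"].read().decode("utf-8")
    return _memory_cache[cache_key]
```

Keeping a copy in memory means a warm container answers repeat questions without another round trip to S3, while S3 remains the durable source the refresh process writes to.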

Building the Skill

The code is organized into a series of functions that are invoked based on the intent. Here are sample mappings from utterances to intent for this skill.

GetNextHolidayIntent what is the next holiday for {State}

GetNextHolidayIntent what is the next holiday for state {State}

GetNextHolidayIntent what is the next public holiday for {State}

GetNextHolidayIntent what is the next public holiday for state {State}

GetNextHolidayIntent find next public holiday in state {State}

GetNextHolidayIntent find next public holiday by state {State}

GetNextHolidayIntent find next public holiday by state

Each intent has its own function, which gathers the data for the response either from data cached in the skill's local memory or by calling out to an S3 bucket. Here's a mapping of where the data is retrieved from:

| Intent | Function | Data |
|---|---|---|
| GetNextHolidayIntent | GetNextHolidayIntent() | S3 Bucket |
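One way to wire an intent name to its function is a simple lookup table, sketched below in Python. The handler body is a placeholder (the real one would read the calendar data from S3), the snake_case function name and the spoken wording are my own, and the fallback message is hypothetical.

```python
def get_next_holiday_intent(slots):
    """Placeholder handler; the real one would consult the calendar staged in S3."""
    state = slots.get("State")
    if not state:
        # The utterance without a {State} slot lands here, so ask a follow-up.
        return "Which state would you like the next public holiday for?"
    return "Looking up the next public holiday in " + state + "."


# Maps each intent name from the interaction model to its handler function.
INTENT_HANDLERS = {
    "GetNextHolidayIntent": get_next_holiday_intent,
}


def dispatch(intent_name, slots):
    """Call the handler registered for the intent, with a fallback for unknown names."""
    handler = INTENT_HANDLERS.get(intent_name)
    if handler is None:
        return "Sorry, I don't know how to help with that."
    return handler(slots)
```

Adding a new intent then only needs a new function and one more entry in `INTENT_HANDLERS`.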

Home Cards

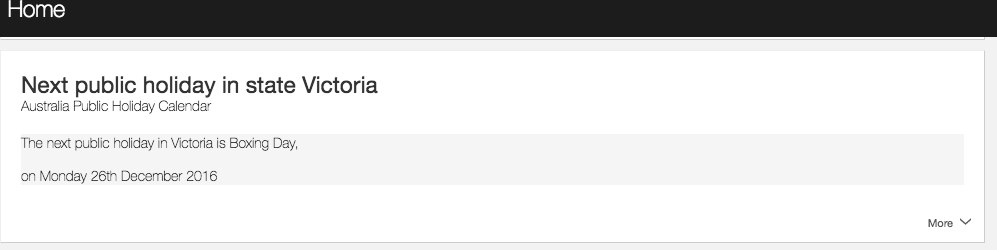

Interactions between a user and an Alexa device can include home cards displayed in the Amazon Alexa App, the companion app available for Fire OS, Android, iOS, and desktop web browsers. These are graphical cards that describe or enhance the voice interaction. A custom skill can include these cards in its responses.
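For reference, a response that carries both speech and a simple home card can be built like this. The helper name `build_response` and the example strings are my own; the field names follow the Alexa custom skill response format for a `Simple` card with plain-text speech.

```python
def build_response(speech_text, card_title, card_content, end_session=True):
    """Build a skill response with spoken output and a Simple home card."""
    return {
        "version": "1.0",
        "response": {
            # What Alexa says out loud.
            "outputSpeech": {"type": "PlainText", "text": speech_text},
            # What shows up in the Alexa companion app.
            "card": {
                "type": "Simple",
                "title": card_title,
                "content": card_content,
            },
            "shouldEndSession": end_session,
        },
    }
```

A call such as `build_response("The next public holiday is on Thursday.", "Australia Calendar", "Next public holiday: Thursday")` returns a dictionary that a Lambda handler can hand back as its result.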

I have created a card for the returned holiday response for future reference. You can see an example below:

Stay tuned for more skills!!